Cilium datapath walkthrough

1. Components of the Cilium datapath

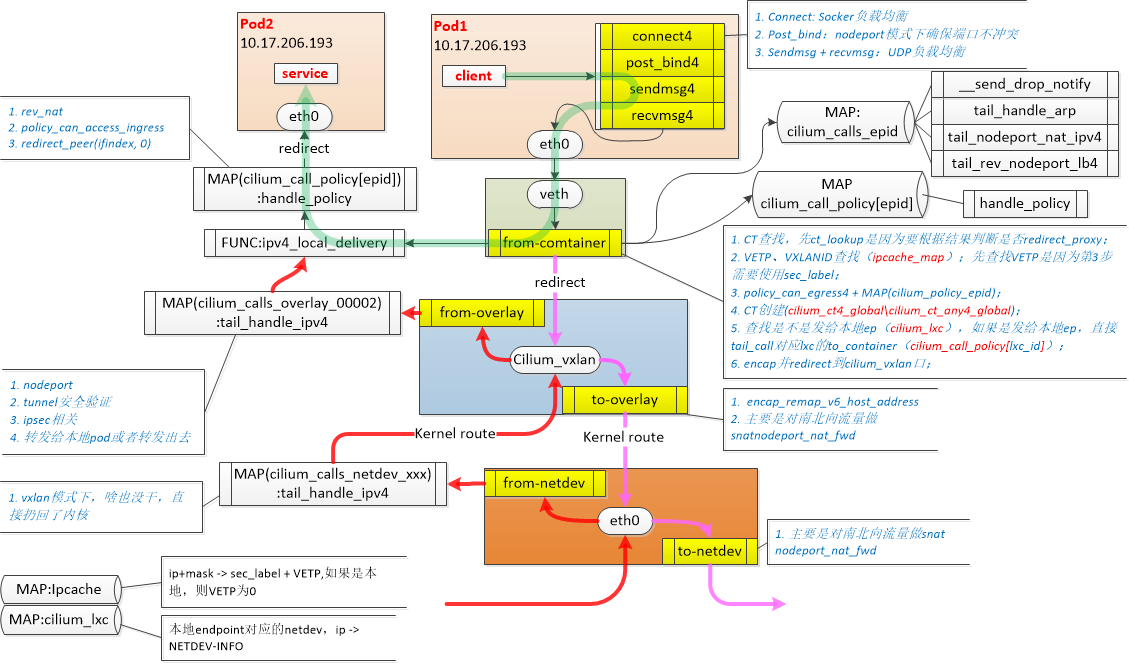

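1.1 Traffic interception points in Cilium

By program type, Cilium's traffic interception falls into two categories:

- Socket-level interception in the kernel, used mainly for load balancing (replacing kube-proxy);

- Interface-level (network device) interception, which replaces the entire datapath;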

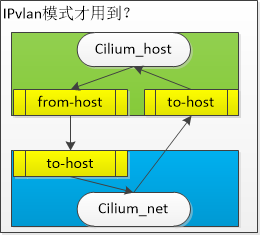

As for cilium_host and cilium_net:

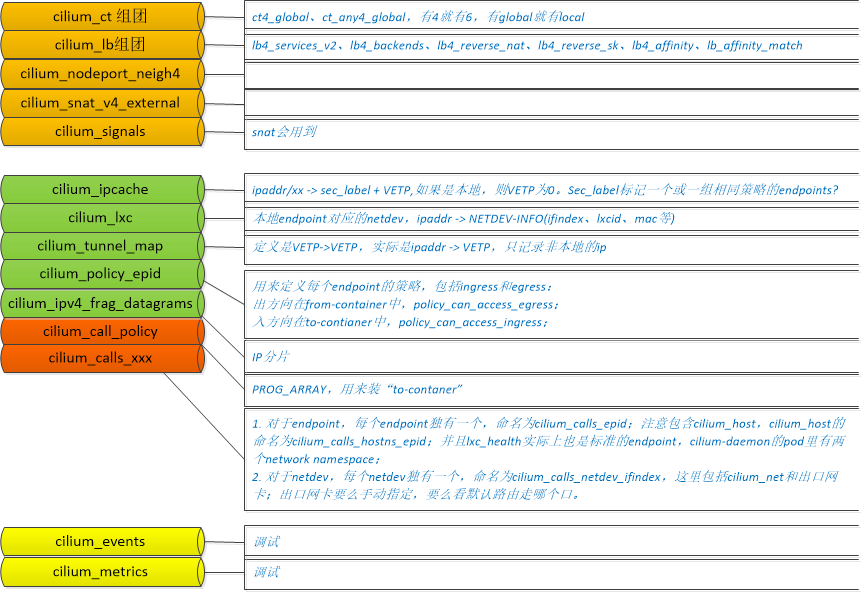

1.2 Composition of the eBPF maps in Cilium

2. Cilium datapath loading process

2.1 Initializing the shared eBPF maps

Cilium has many shared eBPF maps. They are created before any eBPF program is loaded, along this call path:

runDaemon() => NewDaemon() => Daemon.initMaps()

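- cilium_call_policy: PROG_ARRAY that holds the "to-container" programs

- cilium_ct4_global: connection tracking (CT) table for TCP

- cilium_ct_any4_global: CT table for non-TCP traffic

- cilium_events: perf event array that carries datapath notifications to userspace

- cilium_ipcache: IP + mask -> sec_label + VTEP; for local addresses the VTEP is 0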

- cilium_ipv4_frag_datagrams

- cilium_lb4_affinity

- cilium_lb4_backends

- cilium_lb4_reverse_nat

- cilium_lb4_reverse_sk

- cilium_lb4_services_v2

- cilium_lb_affinity_match

- cilium_lxc: netdev of each local endpoint, IP -> NETDEV-INFO

- cilium_metrics

- cilium_nodeport_neigh4

- cilium_signals

- cilium_snat_v4_external

- cilium_tunnel_map: IP -> VTEP; records only non-local IPs
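The cilium_ipcache semantics above (a prefix maps to a security identity plus a tunnel endpoint, with VTEP 0 for local addresses) amount to a longest-prefix-match lookup. The sketch below models only that lookup in plain userspace C. The struct `ipcache_entry`, the linear scan, and the helper names are assumptions made for illustration. In the datapath the map is typically an LPM trie, not an array.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical userspace model of one cilium_ipcache entry. */
struct ipcache_entry {
    uint32_t prefix;     /* IPv4 prefix, host byte order for simplicity */
    uint8_t  prefix_len; /* 0..32 */
    uint32_t sec_label;  /* security identity */
    uint32_t vtep;       /* tunnel endpoint IP, 0 for local addresses */
};

/* Netmask for a prefix length; a 32-bit shift by 32 is undefined, so /0 is special-cased. */
static uint32_t mask_of(uint8_t len)
{
    return len == 0 ? 0 : 0xffffffffu << (32 - len);
}

/* Longest-prefix match: returns the most specific entry covering ip, or NULL. */
static const struct ipcache_entry *
ipcache_lookup(const struct ipcache_entry *tbl, size_t n, uint32_t ip)
{
    const struct ipcache_entry *best = NULL;

    for (size_t i = 0; i < n; i++) {
        assert(tbl[i].prefix_len <= 32);
        uint32_t m = mask_of(tbl[i].prefix_len);
        if ((ip & m) == (tbl[i].prefix & m) &&
            (best == NULL || tbl[i].prefix_len > best->prefix_len))
            best = &tbl[i];
    }
    return best;
}

/* Build a host-order IPv4 address from its four octets. */
static uint32_t ipv4(uint8_t a, uint8_t b, uint8_t c, uint8_t d)
{
    return ((uint32_t)a << 24) | ((uint32_t)b << 16) | ((uint32_t)c << 8) | d;
}
```

For example, with a /0 entry carrying identity 2 (Cilium's reserved "world" identity), a /24 for a remote node's pod CIDR with that node's IP as VTEP, and a /32 for a local pod with VTEP 0, a lookup picks the most specific match. The identities and addresses in such a table are made up. A linear scan is O(n) per packet; an LPM trie is what makes this practical in the kernel.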

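2.2 Basic network setup (init.sh)

2.2.1 Initialization parameters

- LIB=/var/lib/cilium/bpf: directory containing the BPF source code

- RUNDIR=/var/run/cilium/state: working directory

- IP4_HOST=10.17.0.7: IPv4 address of cilium_host

- IP6_HOST=nil

- MODE=vxlan: network mode

- NATIVE_DEVS=eth0: egress NIC; it can be set manually, otherwise it is the interface used by the default route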

- XDP_DEV=nil

- XDP_MODE=nil

- MTU=1500

- IPSEC=false

- ENCRYPT_DEV=nil

- HOSTLB=true

- HOSTLB_UDP=true

- HOSTLB_PEER=false

- CGROUP_ROOT=/var/run/cilium/cgroupv2

- BPFFS_ROOT=/sys/fs/bpf

- NODE_PORT=true

- NODE_PORT_BIND=true

- MCPU=v2

- NODE_PORT_IPV4_ADDRS=eth0=0xc64a8c0

- NODE_PORT_IPV6_ADDRS=nil

- NR_CPUS=64
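NODE_PORT_IPV4_ADDRS stores eth0's address as a hex number. The value is consistent with an IPv4 address kept in network byte order and printed as a 32-bit integer on a little-endian host. Under that assumption, the lowest byte is the first octet, so 0xc64a8c0 decodes to 192.168.100.12. The helper `decode_le_ipv4` is hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Decode a NODE_PORT_IPV4_ADDRS-style hex value into four octets.
 * Assumption: the value is a network-order IPv4 address read as a u32
 * on a little-endian host, so the least significant byte is octet 0.
 * Returns 0 on success, -1 on parse error. */
static int decode_le_ipv4(const char *hex, uint8_t octets[4])
{
    char *end;
    unsigned long v;

    assert(hex != NULL && octets != NULL);
    v = strtoul(hex, &end, 16); /* base 16 also accepts a "0x" prefix */
    if (end == hex || *end != '\0' || v > 0xffffffffUL)
        return -1;
    for (int i = 0; i < 4; i++)
        octets[i] = (uint8_t)((v >> (8 * i)) & 0xff);
    return 0;
}
```

Calling `decode_le_ipv4("0xc64a8c0", o)` fills `o` with 192, 168, 100, 12.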

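2.2.2 What init.sh does

1) Creates cilium_host and cilium_net (a veth pair);

2) In vxlan mode, adds and configures the VXLAN device cilium_vxlan;

3) Compiles and loads the programs and maps for cilium_vxlan;

2 maps:

- cilium_calls_overlay_2: every endpoint has its own tail call map; 2 is ID_WORLD, which init.sh hard-codes;

- cilium_encrypt_state

6 programs: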

- from-container:bpf_overlay.c

- to-container:bpf_overlay.c

- cilium_calls_overlay_2[1] = __send_drop_notify:lib/drop.h

- cilium_calls_overlay_2[7] = tail_handle_ipv4:bpf_overlay.c

- cilium_calls_overlay_2[15] = tail_nodeport_nat_ipv4:lib/nodeport.h

- cilium_calls_overlay_2[17] = tail_rev_nodeport_lb4:lib/nodeport.
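A tail call map such as cilium_calls_overlay_2 is a PROG_ARRAY. The entry program tail-calls a slot by index. If the slot is populated, execution jumps there and does not return. If the slot is empty, the tail call fails and the caller continues. The userspace model below mimics these semantics with an array of function pointers. Slots 1, 7, 15 and 17 come from the listing above. The `struct ctx` type, the handler bodies, the return codes, and the map size are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define CALLS_MAP_SIZE 32 /* hypothetical size for the model */

struct ctx { int marker; }; /* stand-in for the packet context */

typedef int (*prog_fn)(struct ctx *);

/* Hypothetical handlers standing in for the real tail-call targets. */
static int send_drop_notify(struct ctx *c)  { c->marker = 1;  return -1; }
static int handle_ipv4(struct ctx *c)       { c->marker = 7;  return 0; }
static int nodeport_nat_ipv4(struct ctx *c) { c->marker = 15; return 0; }
static int rev_nodeport_lb4(struct ctx *c)  { c->marker = 17; return 0; }

/* Model of the PROG_ARRAY contents listed above. */
static prog_fn calls_map[CALLS_MAP_SIZE] = {
    [1]  = send_drop_notify,
    [7]  = handle_ipv4,
    [15] = nodeport_nat_ipv4,
    [17] = rev_nodeport_lb4,
};

/* Mimic a tail call: if the slot is populated, run it, store its result
 * and return 1 (a real tail call never comes back to the caller).
 * Return 0 for an empty or out-of-range slot so the caller falls through. */
static int tail_call(struct ctx *c, unsigned int slot, int *ret)
{
    assert(c != NULL && ret != NULL);
    if (slot >= CALLS_MAP_SIZE || calls_map[slot] == NULL)
        return 0;
    *ret = calls_map[slot](c);
    return 1;
}

/* Entry program: jump to the requested slot; a missed tail call is a drop. */
static int entry_prog(struct ctx *c, unsigned int slot)
{
    int ret;

    if (tail_call(c, slot, &ret))
        return ret;
    return send_drop_notify(c);
}
```

Because a missed tail call falls through instead of faulting, the entry program needs an explicit fallback path. In this model, that fallback is the drop notification.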

4) Removes the eBPF programs (from-netdev and to-netdev) already attached to the egress NIC;

5) Loads the LB-related eBPF programs and maps:

tc exec bpf pin /sys/fs/bpf/tc/globals/cilium_cgroups_connect6 obj bpf_sock.o type sockaddr attach_type connect6 sec connect6

tc exec bpf pin /sys/fs/bpf/tc/globals/cilium_cgroups_post_bind6 obj bpf_sock.o type sock attach_type post_bind6 sec post_bind6

tc exec bpf pin /sys/fs/bpf/tc/globals/cilium_cgroups_sendmsg6 obj bpf_sock.o type sockaddr attach_type sendmsg6 sec sendmsg6

tc exec bpf pin /sys/fs/bpf/tc/globals/cilium_cgroups_recvmsg6 obj bpf_sock.o type sockaddr attach_type recvmsg6 sec recvmsg6

tc exec bpf pin /sys/fs/bpf/tc/globals/cilium_cgroups_connect4 obj bpf_sock.o type sockaddr attach_type connect4 sec connect4

tc exec bpf pin /sys/fs/bpf/tc/globals/cilium_cgroups_post_bind4 obj bpf_sock.o type sock attach_type post_bind4 sec post_bind4

tc exec bpf pin /sys/fs/bpf/tc/globals/cilium_cgroups_sendmsg4 obj bpf_sock.o type sockaddr attach_type sendmsg4 sec sendmsg4

tc exec bpf pin /sys/fs/bpf/tc/globals/cilium_cgroups_recvmsg4 obj bpf_sock.o type sockaddr attach_type recvmsg4 sec recvmsg4
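The connect4/sendmsg4 programs do load balancing at the socket layer. When an application connects to a service VIP, the cgroup hook rewrites the destination to a backend before any packet is built, so no per-packet DNAT is needed for that traffic. The sketch below models only this rewrite step in userspace C. The service table layout, the caller-supplied `hash` used to pick a backend, and all names are hypothetical. Addresses are in host byte order for simplicity. The model ignores details such as the reverse mapping that cilium_lb4_reverse_sk presumably supports on the recvmsg side.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

struct backend { uint32_t ip; uint16_t port; };

/* Hypothetical model of one service: VIP:port -> list of backends. */
struct service {
    uint32_t vip;
    uint16_t port;
    const struct backend *backends;
    size_t nbackends;
};

/* Stand-in for the destination address the application passed to connect(). */
struct sock_addr_model { uint32_t user_ip4; uint16_t user_port; };

/* Model of a connect4-style hook: if the destination is a known service,
 * rewrite it in place to one backend chosen by `hash` and return 1;
 * otherwise leave the address untouched and return 0. */
static int sock4_connect_model(struct sock_addr_model *ctx,
                               const struct service *svcs, size_t nsvcs,
                               uint32_t hash)
{
    assert(ctx != NULL);
    for (size_t i = 0; i < nsvcs; i++) {
        const struct service *s = &svcs[i];
        if (s->vip == ctx->user_ip4 && s->port == ctx->user_port &&
            s->nbackends > 0) {
            const struct backend *b = &s->backends[hash % s->nbackends];
            ctx->user_ip4 = b->ip;
            ctx->user_port = b->port;
            return 1;
        }
    }
    return 0;
}
```

Because the rewrite happens once per connect() rather than once per packet, the application's socket ends up connected straight to the backend. That is the reason this path can replace kube-proxy's per-packet NAT for traffic originating on the node.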

6) XDP-, Flannel- and IPsec-related initialization has not been studied yet.

2.3 Remaining initialization work

1) Datapath of cilium_host

tc[filter replace dev cilium_host ingress prio 1 handle 1 bpf da obj 554_next/bpf_host.o sec to-host]

tc[filter replace dev cilium_host egress prio 1 handle 1 bpf da obj 554_next/bpf_host.o sec from-host]

Note: loads 2 + 5 programs, 1 PROG_ARRAY map, and 1 cilium_policy_00554 map

- PROG: from-host, to-host

- PROG_ARRAY_MAP: cilium_calls_hostns_00554 (554 is the endpoint ID)

- PROG IN PROG_ARRAY_MAP:

cilium_calls_hostns_00554[1] = __send_drop_notify

cilium_calls_hostns_00554[7] = tail_handle_ipv4_from_netdev => tail_handle_ipv4(ctx, false)

cilium_calls_hostns_00554[15] = tail_nodeport_nat_ipv4

cilium_calls_hostns_00554[17] = tail_rev_nodeport_lb4

cilium_calls_hostns_00554[22] = tail_handle_ipv4_from_host => tail_handle_ipv4(ctx, true)

2) Datapath of cilium_net

tc[filter replace dev cilium_net ingress prio 1 handle 1 bpf da obj 554_next/bpf_host_cilium_net.o sec to-host]

Note: loads 1 + 5 programs and 1 PROG_ARRAY map

- PROG: to-host

- PROG_ARRAY_MAP: cilium_calls_netdev_00004 (4 is the ifindex, visible via `ip link`)

- PROG IN PROG_ARRAY_MAP:

cilium_calls_netdev_00004[1] = __send_drop_notify

cilium_calls_netdev_00004[7] = tail_handle_ipv4_from_netdev => tail_handle_ipv4(ctx, false)

cilium_calls_netdev_00004[15] = tail_nodeport_nat_ipv4

cilium_calls_netdev_00004[17] = tail_rev_nodeport_lb4

cilium_calls_netdev_00004[22] = tail_handle_ipv4_from_host => tail_handle_ipv4(ctx, true)

3) Datapath of eth0

tc[filter replace dev eth0 ingress prio 1 handle 1 bpf da obj 554_next/bpf_netdev_eth0.o sec from-netdev]

tc[filter replace dev eth0 egress prio 1 handle 1 bpf da obj 554_next/bpf_netdev_eth0.o sec to-netdev]

Note: loads 2 + 5 programs and 1 PROG_ARRAY map

- PROG: from-netdev, to-netdev

- PROG_ARRAY_MAP: cilium_calls_netdev_00002 (2 is the ifindex, visible via `ip link`)

- PROG IN PROG_ARRAY_MAP:

cilium_calls_netdev_00002[1] = __send_drop_notify

cilium_calls_netdev_00002[7] = tail_handle_ipv4_from_netdev => tail_handle_ipv4(ctx, false)

cilium_calls_netdev_00002[15] = tail_nodeport_nat_ipv4

cilium_calls_netdev_00002[17] = tail_rev_nodeport_lb4

cilium_calls_netdev_00002[22] = tail_handle_ipv4_from_host => tail_handle_ipv4(ctx, true)
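The map names above share one convention: a prefix plus a five-digit, zero-padded number. For cilium_calls_hostns_00554 and cilium_policy_00554 the number is the endpoint ID. For cilium_calls_netdev_00004 and cilium_calls_netdev_00002 it is the ifindex. The helper below reproduces the names seen in these notes. Its name and signature are hypothetical.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Build "<prefix>_<id as 5 zero-padded digits>", e.g. cilium_calls_netdev_00004.
 * Returns 0 on success, -1 if the name does not fit in buf. */
static int map_name(char *buf, size_t len, const char *prefix, unsigned int id)
{
    int n;

    assert(buf != NULL && prefix != NULL);
    n = snprintf(buf, len, "%s_%05u", prefix, id);
    return (n >= 0 && (size_t)n < len) ? 0 : -1;
}
```

With this, `map_name(buf, sizeof buf, "cilium_calls_hostns", 554)` yields cilium_calls_hostns_00554. Knowing the suffix source tells you whether to look up an endpoint ID or run `ip link` when mapping a pinned map back to its owner.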

4) Datapath of lxc_health, which is exactly the same as the datapath created when a pod is added

tc[filter replace dev lxc_health ingress prio 1 handle 1 bpf da obj 908_next/bpf_lxc.o sec from-container]

Note: loads 1 + 4 + 1 programs, 1 PROG_ARRAY map, and 1 cilium_policy_00908 map

- PROG: from-container

- PROG IN PROG_ARRAY_MAP:

cilium_calls_00908[1] = __send_drop_notify

cilium_calls_00908[6] = tail_handle_arp

cilium_calls_00908[15] = tail_nodeport_nat_ipv4

cilium_calls_00908[17] = tail_rev_nodeport_lb4

cilium_call_policy[908] = handle_policy (to-container appears to have been deprecated)
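Unlike the per-endpoint cilium_calls_XXXXX maps, cilium_call_policy is a single shared PROG_ARRAY indexed by endpoint ID. Traffic headed for a local endpoint can therefore jump into that endpoint's policy program by ID; here, slot 908 holds lxc_health's handle_policy. The userspace model below illustrates this dispatch. The types, the verdict values, the map size, and the treatment of an empty slot as a drop are assumptions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define POLICY_MAP_SIZE 65536 /* hypothetical: one slot per 16-bit endpoint ID */

struct pkt { uint16_t dst_ep_id; int verdict; }; /* hypothetical context */

typedef int (*policy_prog)(struct pkt *);

/* Model of cilium_call_policy: endpoint ID -> policy program. */
static policy_prog call_policy[POLICY_MAP_SIZE];

/* Hypothetical policy program for endpoint 908 (lxc_health): allow. */
static int handle_policy_908(struct pkt *p)
{
    p->verdict = 0;
    return 0;
}

/* Local delivery: jump to the destination endpoint's policy program.
 * An empty slot is treated as a drop (-1), like a missed tail call. */
static int deliver_local(struct pkt *p)
{
    policy_prog prog;

    assert(p != NULL);
    prog = call_policy[p->dst_ep_id];
    if (prog == NULL) {
        p->verdict = -1;
        return -1;
    }
    return prog(p);
}
```

Once the destination endpoint ID is known, registering `call_policy[908] = handle_policy_908` is enough for `deliver_local` to enforce that endpoint's policy. Keeping one shared array means a single map can serve every local endpoint.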